We have a strict honest review policy, but please note that when you buy through our links, we may receive a commission. This is at no extra cost to you.

In this post, I’m going to show you how to create a newsletter in 7 simple steps. And at the end of it, you’ll find some bonus tips on how to create effective email marketing campaigns.

1. Organize your data

In order to send the most relevant e-newsletters that generate the most revenue, you need a high-quality mailing list.

But a lot of businesses have their contacts’ email addresses tucked away in a messy spreadsheet somewhere – or more likely, spread across several very messy spreadsheets.

It might even be the case that some of your email marketing data has been captured offline, for example on application forms, surveys, customer files and other types of paperwork.

Regardless of where your email addresses are stored, it’s a good idea to digitize and consolidate all of them into one clean, well-organized spreadsheet before you send any newsletters.

Then, make sure this spreadsheet is segmented as well as possible. This means adding a field (column) to it that you can use to categorize subscribers.

For example, you could categorize your subscribers as:

- potential customers

- existing customers

- past customers.

How far you take this process is entirely up to you — you could, for example, segment your mailing list exhaustively, storing detailed information about product purchases, sign-up dates, demographics etc. in it.

Or you could keep things fairly simple and make do with a basic list of email addresses and lead types.

Either way, the basic aim of the exercise is to get your data ‘into shape’ — so that you are able to send the right message to the right person at the right time.

When you’ve finished your database preparation, you’ll have a spreadsheet that contains all your cleaned, segmented data in one place. This is your mailing list, which can now be imported to an email marketing app (I’ll discuss email marketing apps in just a moment).
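If your contacts are spread across several spreadsheet exports, a short script can do some of this tidying for you. Below is a minimal Python sketch of the consolidation step: it merges several CSV files, normalizes addresses so that duplicates match, and fills in blank fields from later copies of the same contact. The column names (`email`, `first_name`, `segment`) are hypothetical, so rename them to match your own files.

```python
import csv
import io

# Hypothetical column names; rename them to match your own spreadsheets.
FIELDS = ["email", "first_name", "segment"]


def normalize_email(raw):
    """Trim whitespace and lower-case an address so duplicates match."""
    return (raw or "").strip().lower()


def consolidate(csv_texts):
    """Merge several CSV exports into one de-duplicated list of contacts.

    The first row seen for an address wins, but any blank fields are
    filled in from later rows for the same address.
    """
    contacts = {}  # normalized email -> merged row (insertion-ordered)
    for text in csv_texts:
        for row in csv.DictReader(io.StringIO(text)):
            email = normalize_email(row.get("email"))
            if "@" not in email:
                continue  # skip blank or obviously malformed addresses
            merged = contacts.setdefault(email, {field: "" for field in FIELDS})
            merged["email"] = email
            for field in FIELDS[1:]:
                value = (row.get(field) or "").strip()
                if value and not merged[field]:
                    merged[field] = value
    return list(contacts.values())


def to_csv(contacts):
    """Write the cleaned contacts out as a single CSV ready for import."""
    out = io.StringIO()
    writer = csv.DictWriter(out, fieldnames=FIELDS)
    writer.writeheader()
    writer.writerows(contacts)
    return out.getvalue()


if __name__ == "__main__":
    old_list = "email,first_name,segment\nAnn@Example.com ,Ann,existing customer\n"
    event_signups = (
        "email,first_name,segment\n"
        "ann@example.com,,\n"
        "bob@example.com,Bob,potential customer\n"
    )
    print(to_csv(consolidate([old_list, event_signups])))
```

The resulting CSV can usually be imported straight into an email marketing app, but check your app’s import documentation for the exact column format it expects.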

⚠️ Remember: you need permission to email people!

Remember that you always need permission from your contacts to send them emails.

When compiling your mailing list, be aware of data protection laws, and only include people who have fully opted in to receive your emails. This is particularly important in an era of stricter data protection legislation like GDPR and CCPA.

2. Create your newsletter schedule

The next step is to plan your communications carefully. This means creating a newsletter schedule that maps out:

- what content you are going to put in your email newsletters

- who you are going to send them to

- when you are going to send them.

You can then refer to this schedule throughout the year — and ensure that you have all the necessary content ready to go well in advance of each mailout.

And, because you’ll have segmented your data in advance, you will be sending your e-newsletters to precisely the right group of recipients.

💡 Tip: use shared documents to manage your e-communications schedule

Email marketing often involves quite a lot of stakeholders — you may need text from one individual in your business, images from somebody else, sign-off from a manager and so on.

To manage this process, I generally suggest using an online productivity tool like Microsoft 365 or Google Workspace. Both let you create a shared online document that you can use to plan and manage your e-communications schedule with others.

3. Pick the right email marketing app

Many small business owners still think that sending e-newsletters means compiling a list of email addresses, and then copying and pasting them into the BCC field of a clunky-looking Outlook message.

This is a very time-consuming way to go about things, and it’s also very ineffective, because it:

- doesn’t allow you to send very professional-looking e-newsletters

- prevents you from accessing important stats like open rates and clickthroughs

- increases the likelihood of your email triggering spam filters

- means that you’re not availing of sophisticated email marketing features like autoresponders or split testing.

Accordingly, it is a much better idea to use a dedicated email marketing tool for sending your e-newsletter — one that lets you send ‘HTML newsletters.’

HTML newsletters, as their name suggests, use HTML code to display text and graphics in an attractive way.
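Under the hood, an HTML newsletter is typically sent as a ‘multipart’ email that carries both an HTML version and a plain-text version of the same message, so that email programs which don’t display HTML still show something readable. The Python sketch below builds such a message with the standard library’s `email` package. It’s only meant to illustrate the structure (your email marketing app generates all of this for you), and the addresses and content are placeholders.

```python
from email.message import EmailMessage


def build_newsletter(sender, recipient, subject, plain_text, html):
    """Build a multipart/alternative message with plain-text and HTML parts.

    Email programs that render HTML show the HTML part; others fall back
    to the plain-text part.
    """
    msg = EmailMessage()
    msg["From"] = sender
    msg["To"] = recipient
    msg["Subject"] = subject
    msg.set_content(plain_text)                # text/plain part
    msg.add_alternative(html, subtype="html")  # text/html part
    return msg


if __name__ == "__main__":
    html_body = """\
<html>
  <body style="font-family: Arial, sans-serif;">
    <h1>Our spring update</h1>
    <p>Hi there, here is what is new this month.</p>
    <a href="https://example.com/offer">See the offer</a>
  </body>
</html>
"""
    message = build_newsletter(
        "news@example.com",
        "reader@example.com",
        "Our spring update",
        "Hi there, here is what is new this month: https://example.com/offer",
        html_body,
    )
    print(message.get_content_type())
```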

There are many web-based solutions you can use to send HTML newsletters: popular options include GetResponse, AWeber, Mailchimp and Campaign Monitor.

All the apps mentioned above allow you to:

- import the database that you created at the outset of this process

- make use of a range of attractive newsletter templates that will display correctly across all device types

- send out proper HTML e-newsletters that stand the greatest chance of being delivered (and crucially, read!).

Now, it’s important to note that these tools don’t require you to know anything about HTML code to use them. You simply use a drag and drop tool to design your newsletter, and the app writes all the necessary HTML code automatically for you.

💡Tip: there’s a free version of GetResponse available here.

Let’s move on now to newsletter templates.

4. Choose or design an e-newsletter template

Once you’ve decided on which email marketing app you’re going to use, you need to consider how your newsletters are going to look.

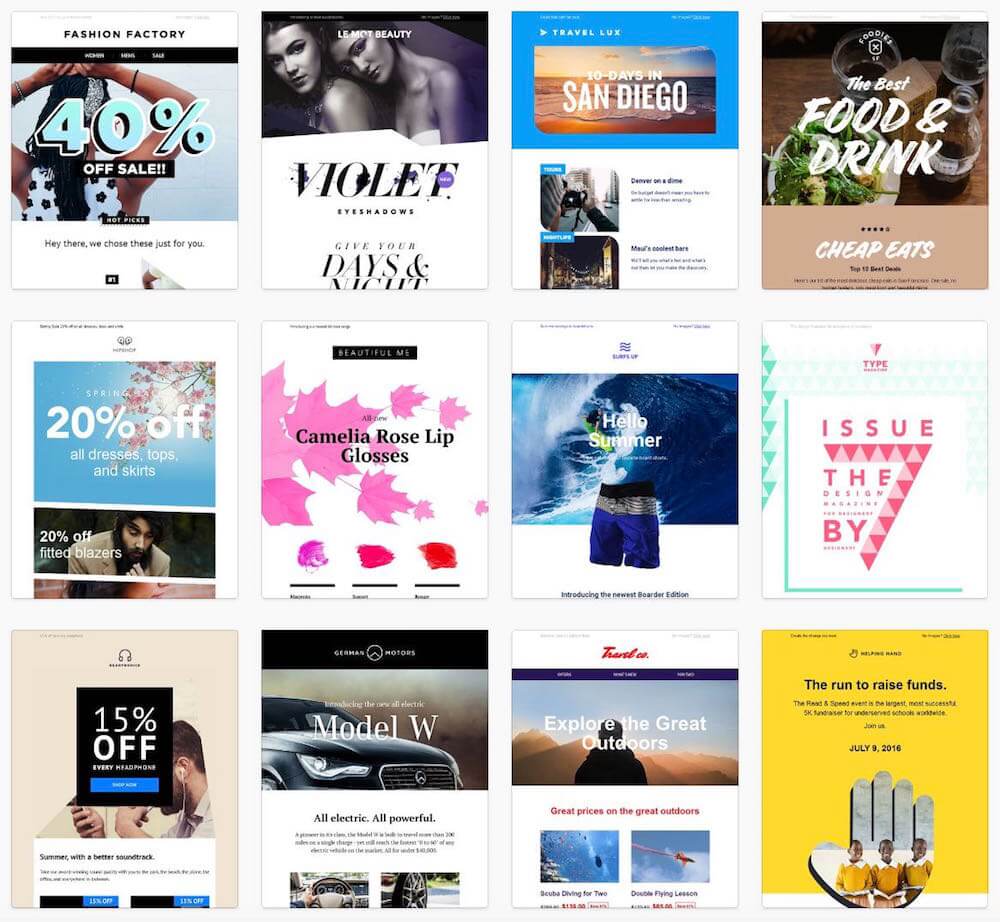

All the solutions mentioned above provide a wide range of professionally-designed newsletter templates that you can use as the starting point for your e-newsletter design.

When you’ve picked a template, you can tweak its design elements using a drag and drop editor so that your e-newsletter design is consistent with your brand. You can then save this as your own template and use it for future newsletters.

Now, if your design skills are not particularly strong, you could consider hiring a designer to code bespoke e-newsletter templates.

But in most cases, you should be absolutely fine with using one of the designs available from your email marketing app’s template library.

🤔 Do you need graphics, or will text do?

One thing worth remembering when creating a newsletter is that you might not always need a graphics-filled template.

Sometimes simple text-based templates — i.e., ones that look like regular emails — convert better, because when these are used, your newsletter is perceived as less of an advert, and more of a personal communication.

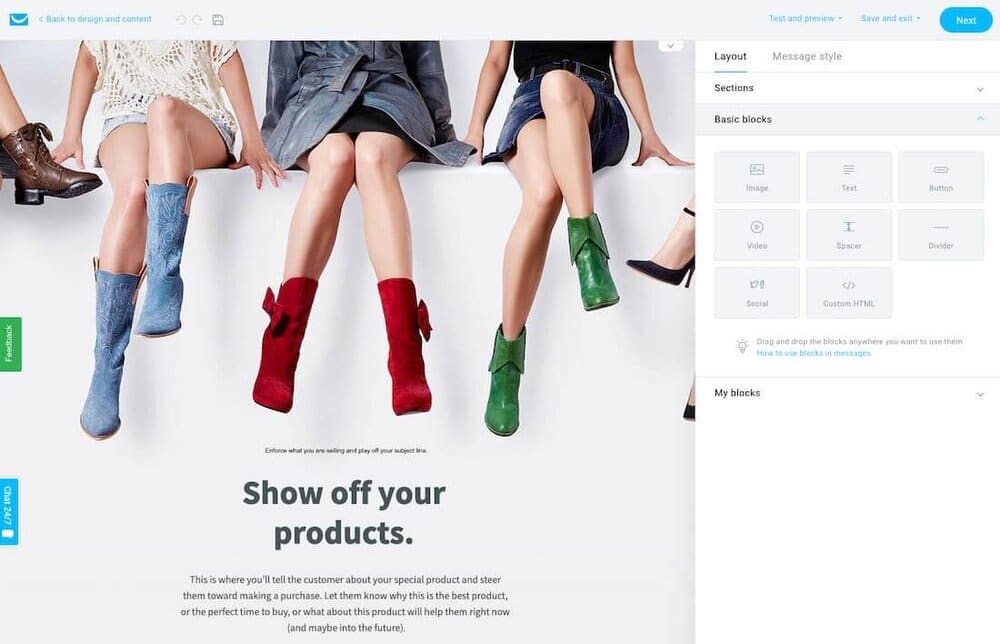

5. Add your content

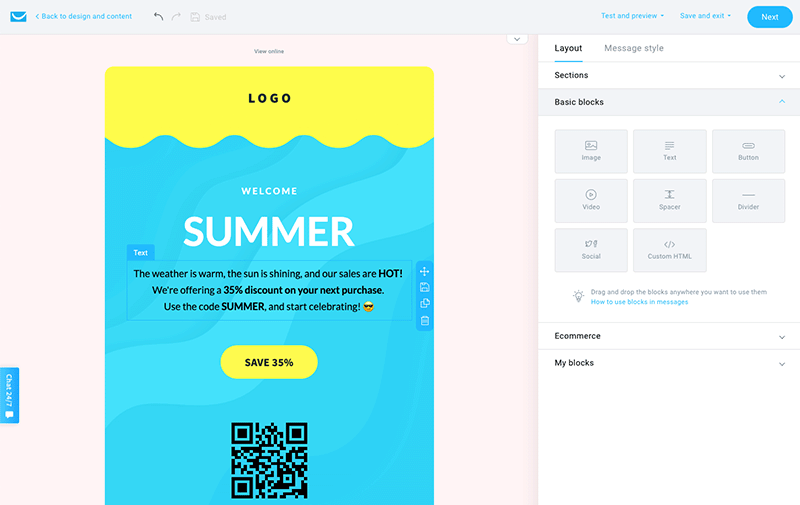

Once you’ve decided on a template, it’s time to add your content! Most of the major email marketing tools make it easy to do this — usually via a drag and drop editor (see screenshot below for an example of one being used to design a newsletter).

These drag and drop editors tend to be fairly user-friendly — if you’ve got experience of laying out content in Microsoft Word or Google Docs, you’ll probably be fine with using one.

Here are a few tips for adding content to your newsletter:

- Keep things short and to the point.

- Try to settle on one call-to-action (CTA) rather than lots of them — decide what you’d like your users to do and focus on crafting copy that encourages them to do precisely that.

- Ensure that any images you add to your newsletter are not too large in size (for most templates, an image width of 600-800 pixels is usually best). Newsletters that contain very large image files can get flagged as spam by email programs.

- Use buttons where appropriate to make it easier for users to click on your key calls-to-action.

- Use personalization tags where appropriate. Email marketing tools let you perform ‘mail merges’ that insert names, company names, product details and just about anything else into your newsletters (using tags that correspond to fields in your mailing list).

- Make your newsletters easily shareable: add ‘forward to a friend’ buttons, social media icons etc. to them, and encourage readers to share your content.

- Add engaging preview text to your messages (this is the text that appears beside your subject line in an inbox).
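To illustrate how personalization tags work, here’s a minimal Python mail-merge sketch using the standard library’s `string.Template`. The `$first_name`-style tags and the fallback values are hypothetical (each email marketing app has its own tag syntax), but the idea is the same: each tag is replaced with the matching field from a subscriber’s row, and a default is used when that field is blank.

```python
from string import Template

# Hypothetical merge tags in Python's $name syntax; real apps use their own format.
GREETING = Template("Hi $first_name, here are this month's deals at $company.")

# Hypothetical defaults used when a subscriber's field is missing or empty.
FALLBACKS = {"first_name": "there", "company": "our store"}


def personalize(template, subscriber):
    """Fill merge tags from a subscriber's fields, using fallbacks for blanks."""
    values = dict(FALLBACKS)
    for key, value in subscriber.items():
        if value:  # skip empty fields so the fallback is used instead
            values[key] = value
    return template.substitute(values)


if __name__ == "__main__":
    print(personalize(GREETING, {"first_name": "Ann", "company": "Acme"}))
    print(personalize(GREETING, {"first_name": ""}))
```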

6. Test your e-newsletters

By now you have:

- a clean database

- an e-communications schedule

- an email marketing app

- a template

- superb content

…so it’s nearly time to send your newsletter!

But before you do that, it’s really important to test it carefully. And there are generally three steps that need to be taken here.

Step 1: Check that your e-newsletter is arriving safely

The first test you’ll need to perform with any newsletter you create is a simple one — make sure that it is arriving safely in inboxes (and not in a spam folder!).

Professional email marketing solutions generally let you send test versions of your newsletters, so use this functionality to send a test message to a few different email programs (Gmail, Outlook, Yahoo etc.) and check that it’s not being routed into a junk folder.

Typically, apps that let you test newsletter deliverability also give you suggestions on how to improve it — follow them!

Step 2: Check that your e-newsletter is displaying correctly

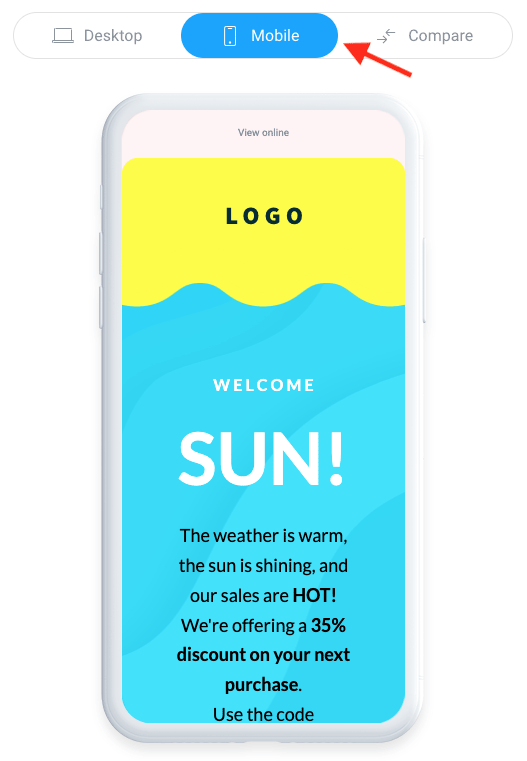

How your e-newsletters display in different contexts can vary considerably. Sometimes an email that looks great in Outlook can look terrible in Gmail, or the desktop version of a message can look fantastic, while the smartphone one is all messed up.

So, always check that your message is displaying as intended across a wide range of devices and email programs — and edit your template accordingly — before hitting the send button.

And finally, if you’ve used any personalization tags in your messages, ensure these are displaying correctly too. There’s nothing worse than an e-newsletter that starts ‘Dear [First Name]’…
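If you export or preview rendered messages, a quick automated check can catch tags that didn’t merge. The Python sketch below searches for a few common placeholder styles; the patterns are assumptions, so swap in whatever tag format your own app uses.

```python
import re

# Hypothetical placeholder styles to look for; adjust to your app's tag format.
LEFTOVER_TAG = re.compile(
    r"\[[A-Za-z ]+\]"        # e.g. [First Name]
    r"|\{\{\s*\w+\s*\}\}"    # e.g. {{ first_name }}
    r"|\*\|\w+\|\*"          # e.g. *|FNAME|*
)


def find_unmerged_tags(rendered):
    """Return any merge tags that survived into the rendered email text."""
    return LEFTOVER_TAG.findall(rendered)


if __name__ == "__main__":
    print(find_unmerged_tags("Dear [First Name], welcome to our newsletter!"))
```

The square-bracket pattern will also flag legitimate bracketed text, so treat any matches as things to review rather than definite errors.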

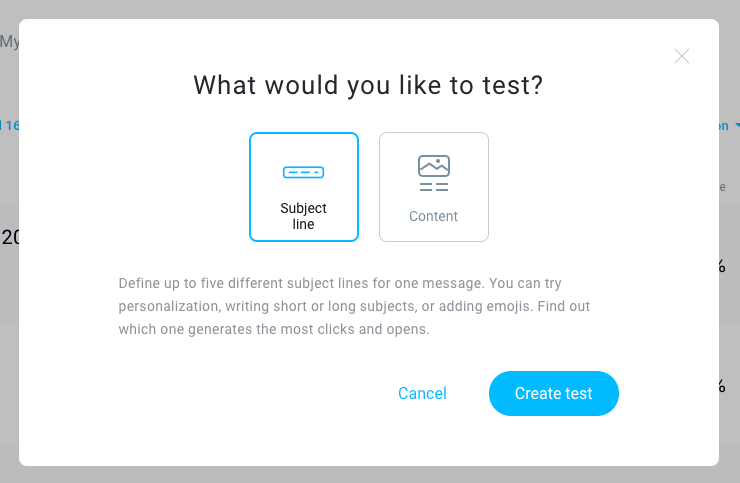

Step 3: Split test your messages

Split testing (also known as ‘A/B testing’) involves trying out different versions of your message on a small sample of your data, then sending the best-performing version to the rest of your list.

You could, for example, create three versions of the same newsletter, each with a different subject line, and send them to a sample of 1,000 people on your database. After a few hours, you’ll be able to identify which subject line led to the best open rate, and then send an email with the ‘winning’ subject line to the remainder of your list.

Helpfully, a lot of email marketing tools do this automatically for you.
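If you’re curious about what the software is doing, here’s a rough Python sketch of the process: randomly draw a test sample, divide it between the variants, then pick the variant with the highest open rate. The function names are my own, and the sketch deliberately ignores statistics; with small samples, a slightly higher open rate may just be down to chance.

```python
import random


def split_sample(addresses, n_variants, sample_size, seed=None):
    """Randomly draw a test sample and deal it into near-equal variant groups.

    Returns (groups, remainder): one list of addresses per variant, plus the
    addresses outside the sample, which will later get the winning version.
    """
    rng = random.Random(seed)
    shuffled = list(addresses)
    rng.shuffle(shuffled)
    sample, remainder = shuffled[:sample_size], shuffled[sample_size:]
    groups = [sample[i::n_variants] for i in range(n_variants)]
    return groups, remainder


def pick_winner(results):
    """Return the variant label with the highest open rate.

    `results` maps each variant label to an (opens, sent) pair.
    """
    return max(results, key=lambda label: results[label][0] / results[label][1])
```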

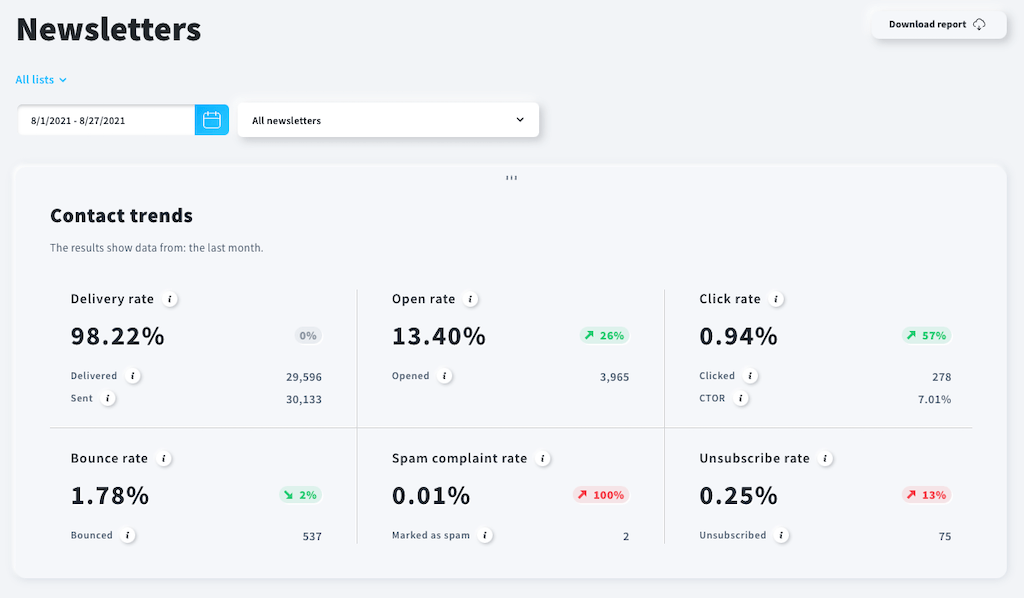

7. Measure success using email analytics

Most e-newsletter tools come with extensive reporting features – after sending an e-newsletter, you should be able to access statistics that let you measure the performance of your e-newsletters and email marketing campaigns.

The key things you usually need to look at are:

- open rates

- clickthrough rates (CTRs)

- unsubscribe rates.

You can use these statistics to help you create better e-newsletters that generate more conversions in future.
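These rates are simple ratios. The Python sketch below shows one common way to calculate them; definitions vary between apps (some calculate clickthrough rate against emails delivered, others against emails opened), so check how your own tool defines each metric before comparing figures.

```python
from dataclasses import dataclass


@dataclass
class CampaignStats:
    delivered: int      # emails accepted by recipients' mail servers (sent minus bounces)
    unique_opens: int   # subscribers who opened at least once
    unique_clicks: int  # subscribers who clicked at least one link
    unsubscribes: int

    def open_rate(self):
        return self.unique_opens / self.delivered

    def clickthrough_rate(self):
        # Clicks as a share of delivered emails.
        return self.unique_clicks / self.delivered

    def click_to_open_rate(self):
        # Clicks as a share of opens; some apps report this as their CTR.
        return self.unique_clicks / self.unique_opens

    def unsubscribe_rate(self):
        return self.unsubscribes / self.delivered
```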

For example…

- you might notice that a particular type of email subject line results in more opens of your e-newsletters

- you might discover that buttons drive more clickthroughs to your site than text-based links (or vice versa)

- you might find that emails about certain topics lead to a lot of unsubscribes

- you might notice that a plain text email generates more sales than one filled with images

- you might find that a particular sender name results in better conversion rates.

These sorts of findings can really help you understand what’s working or not with your e-newsletter campaigns — and ultimately improve their effectiveness.

💡Tip: because not everybody will open your newsletter at once, it’s best to leave a bit of a gap between sending your newsletter and reviewing the statistics for it.

Bonus email marketing tips

The above steps will help you send newsletters. But to create a great email marketing campaign, you’ll need to grow your list and communicate with your audience in smart ways.

Growing your list

To make the most of email marketing, you need to have a great (and growing!) list. Ways to do this include:

- making the most of ‘offline’ data capture opportunities (i.e., ensuring you are giving people the opportunity to sign up to your list on paperwork and at face-to-face events)

- incentivising data capture (i.e., giving people access to exclusive resources or tools when they sign up to your list)

- using popups

- creating engaging blog content that drives traffic and sign-ups

- ensuring data capture forms are present throughout your site, and in the most effective places too.

For our full set of tips on increasing your subscriber count, check out our guide to growing an email list, or watch the video below.

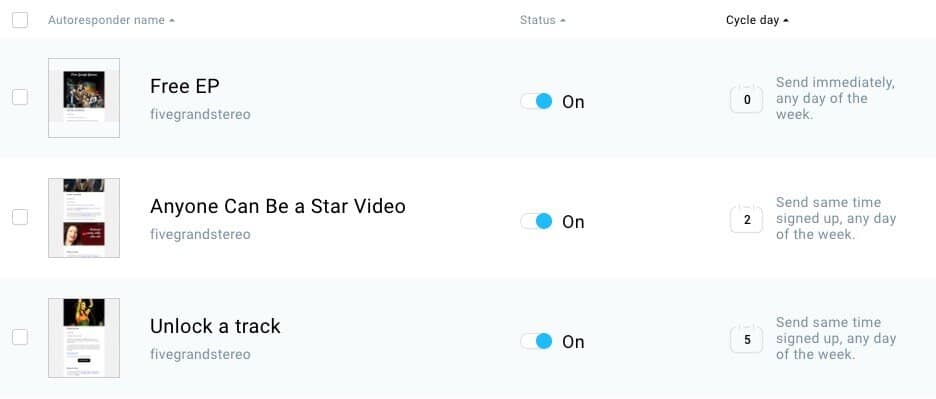

Using autoresponders

A fantastic way to send e-newsletters involves autoresponders or ‘drip’ email marketing campaigns — automated emails that you can program so that when somebody signs up to your mailing list, they automatically receive messages of your choosing, at intervals of your choosing, based on rules of your choosing.

For example, you can instruct your email marketing software to automatically send a follow-up email if a subscriber…

- purchases a product

- opens a particular email

- clicks a particular link

- visits a particular web page

…and so on.

You can learn more about how to send autoresponders here.
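Conceptually, an autoresponder is a set of ‘if this happens, send that email after this delay’ rules. The Python sketch below models that idea; the event names, email templates and delays are hypothetical, and real apps let you build these rules in a visual workflow editor rather than in code.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class Rule:
    trigger: str         # hypothetical event name, e.g. "purchased_product"
    email_template: str  # which follow-up email to send
    delay: timedelta     # how long to wait after the event


# Hypothetical rules for illustration only.
RULES = [
    Rule("signed_up", "welcome", timedelta(0)),
    Rule("signed_up", "getting_started_tips", timedelta(days=3)),
    Rule("purchased_product", "thank_you_and_review_request", timedelta(days=7)),
    Rule("clicked_link", "more_on_this_topic", timedelta(days=1)),
]


def schedule_follow_ups(event, occurred_at, rules=RULES):
    """Return (send_time, template) pairs for every rule the event triggers."""
    return sorted(
        (occurred_at + rule.delay, rule.email_template)
        for rule in rules
        if rule.trigger == event
    )


if __name__ == "__main__":
    for when, template in schedule_follow_ups("signed_up", datetime(2024, 1, 1, 9, 0)):
        print(when, template)
```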

Follow best practice

Every time you create or send an e-newsletter, you should ensure that you are…

- obeying the law, and

- not over-communicating with your subscribers.

Otherwise, you risk legal action, a high unsubscribe rate and having your email marketing account suspended.

Here are some key suggestions on how to follow best practice when marketing your business via email:

- When you capture email addresses, make it clear on any sign up forms and landing pages that people are subscribing to your mailing list.

- Include a link to your privacy policy on your website and data capture forms.

- Don’t spam: always ensure that anyone on your list has actually signed up to it.

- Don’t over-communicate: leave decent gaps between messages.

- Always send relevant, interesting content to people on your mailing list: this will minimize unsubscribes.

- Always make it easy for people on your mailing list to unsubscribe.

- Add an email signature to your messages — this can reinforce your brand identity and build trust.

- Be very aware of data protection legislation (particularly GDPR and CCPA).

- Back up your mailing list regularly — so that if you ever lose access to your email marketing tool account, you’ll still have a copy of it!

- Ensure that your website can handle large volumes of traffic caused by newsletter blasts.
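Several of these rules boil down to a simple filter you can apply before every send. Here’s a minimal Python sketch, assuming hypothetical `opted_in`, `unsubscribed` and `last_emailed` fields for each subscriber and an arbitrary seven-day minimum gap between messages; in practice, your email marketing app will normally track opt-ins and unsubscribes for you.

```python
from dataclasses import dataclass
from datetime import date, timedelta
from typing import Optional

MIN_GAP = timedelta(days=7)  # hypothetical minimum gap between messages


@dataclass
class Subscriber:
    email: str
    opted_in: bool
    unsubscribed: bool = False
    last_emailed: Optional[date] = None


def eligible_recipients(subscribers, today, min_gap=MIN_GAP):
    """Keep people who opted in, haven't unsubscribed and weren't emailed recently."""
    return [
        s.email
        for s in subscribers
        if s.opted_in
        and not s.unsubscribed
        and (s.last_emailed is None or today - s.last_emailed >= min_gap)
    ]
```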

I hope you found these tips on how to create a newsletter useful — and that you’re now in a better place to create your own fantastic email marketing campaigns!

If you’ve got any questions about email marketing, just put them in the comments below — I’ll do my best to answer! And of course, do make sure you subscribe to our mailing list 🙂

How to create a newsletter FAQ

What’s the best app for sending newsletters?

Good options for sending e-newsletters include GetResponse, AWeber, Mailchimp and Campaign Monitor.

Can I make email newsletters for free?

Yes — several of the most popular email marketing apps now let you send email newsletters for free to small lists (500-2,000 subscribers). Well-known solutions that provide users with a free account include Mailchimp, AWeber and GetResponse.

How do I create an interesting newsletter?

The key to creating an interesting newsletter is to give your readers valuable content rather than a sales pitch. By sharing relevant tips, resources and information with your subscribers, you can keep them engaged, drive more traffic to your site and ultimately generate more sales.

How do I get more people to subscribe to my newsletters?

The two main ways to increase subscribers to your mailing list are through online advertising (where you run ads that offer something in exchange for an email address) or through creating great blog content that draws people to your website (where your site visitors are given the opportunity to sign up to your mailing list). If using the second approach, always ensure that your mailing list sign-up forms are highly visible and consider using popups or exit-intent forms to maximize the number of email addresses you capture.

Can I create a free e-newsletter in Word?

Yes — but it’s not a great idea to do so! Microsoft Word is an app that is primarily designed for creating printed materials, and doesn’t give you the sophisticated e-newsletter creation and sending tools that a dedicated email app equips you with.

Email marketing tool reviews and comparisons

You may also find our email marketing reviews and comparisons helpful:

Comments (5)

Hi Chris, really useful article. I’m in the process of buying a SaaS ERP system for my small business. The best ERP provider only has integrations to Squarespace, Shopify and Mailchimp for email automation (& marketing). As I need more advanced marketing functions (e.g. social posting scheduling etc), I assume that Mailchimp is the best option, rather than Squarespace or Shopify, if I want more marketing automation? Thxs Malcolm

Hi there Malcolm, thanks for the kind words about the post! Yes, Mailchimp would have significantly more automation capabilities than either Squarespace or Shopify (at this time anyway). Squarespace has some automation features, but not particularly advanced ones.

One thing I’d say though is that the advanced automation features in Mailchimp are more to do with scheduling newsletters and creating subscriber journeys, rather than social media posts.

Hope this helps?

Great how-to. I believe GetResponse have the most user-friendly platform when it comes to creating and sending newsletters.

Some marketing services like Remail.io suggest their own templates for email marketing, I used a couple of them but still had to add what I thought was right for my audience.

great overview and list! thank you! -Sharon www.MountainWisdomWholisticHealth.com